Introduction

Nowadays, there are many companies offering voice assistants and other voice intelligence solutions, and it can be challenging to navigate this newly-crowded market. The goal of this article is to guide you through the various voice-based tech solutions available and their inherent differences so that you can pick the most suitable option for your organization’s needs.

In this guide, we’ll go over the following items:

- The technology behind a Voice AI solution and all of its components, such as ASR, SLU, and TTS.

- The factors that make voice conversations challenging for voicebots, such as urgency and latency, spoken language imperfections, and environmental challenges.

- What makes a Voice AI vendor truly “voice-first.”

In the last section of this guide, we’ve outlined the main categories of vendors offering Voice AI solutions and the challenges you might encounter when engaging with them:

- Telephony and CRM companies

- Chat-first companies

- Conversational analytics companies

- Voice-first companies, whose primary focus is to develop and offer Voice AI solutions (e.g. Skit.ai)

The Technology and Mechanisms of a Typical Voicebot

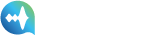

A Digital Voice Agent (Skit.ai’s core product) is a Voice AI-powered machine capable of conversing with consumers within a specific context. The accompanying illustration is a simplified view of the parts that work together, in sync, for the smooth functioning of a voicebot, in this instance Skit.ai’s Digital Voice Agent.

If you need a more exhaustive explanation of the functioning of a voicebot, please read this article for further understanding.

Telephony: This is the primary carrier of the Digital Voice Agent. Whenever a customer calls up a business, it is through telephony that the call reaches the Voice Agent (either deployed over the cloud or on-premise). There are various types of telephony providers; Skit.ai also provides an advanced cloud-telephony service, enabling even faster deployment times and flawless integration.

Typically a conversation with a voicebot involves the seamless flow of information, and here is how it happens:

The spoken word is transmitted through telephony and reaches the first part of the voicebot, the Dialogue Manager, which orchestrates the flow of information within the voicebot. It also captures and maintains other information: for example, it keeps track of state, user signals (gender, etc.), environmental cues (like noise), and more.

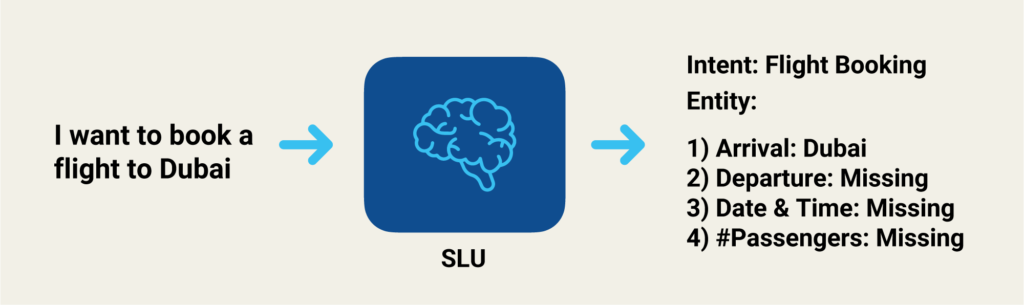

The Dialogue Manager directs the caller’s audio to the Automatic Speech Recognition (ASR) engine, where the speech is converted into text. When the voicebot needs to ask the caller for information, the Dialogue Manager instead invokes the Text-to-Speech (TTS) engine so that the voicebot can speak the request.

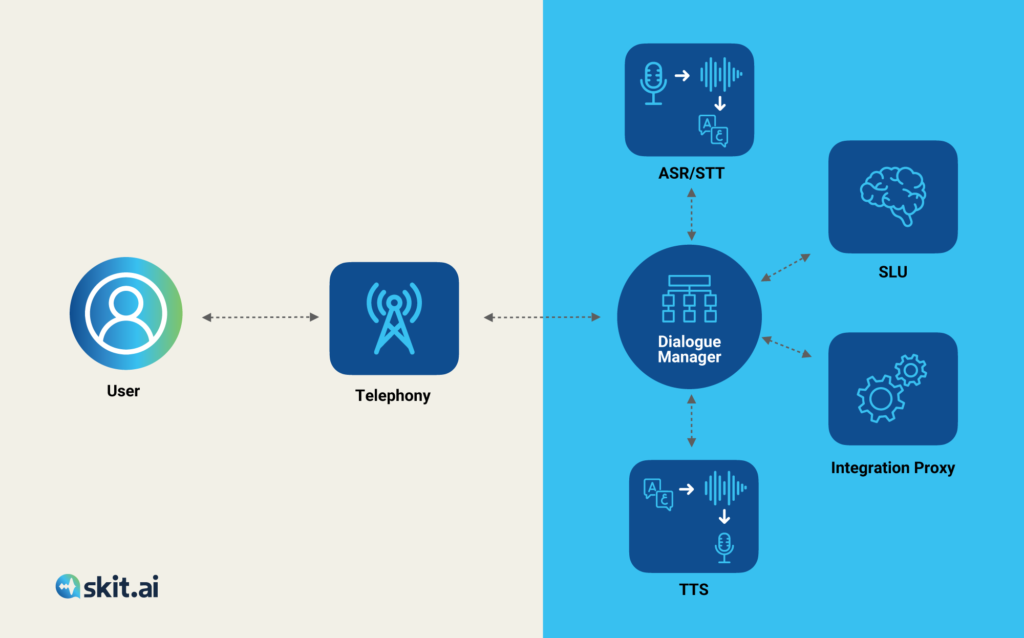

SLU: The text transcripts are then forwarded from ASR to the Spoken Language Understanding (SLU) engine, the brain of the voicebot, where:

- It cleans and pre-processes the data to get the underlying meaning,

- And then extracts the important information and data points from the ASR transcripts.

A good voicebot uses the N-best ASR hypotheses (the top alternative transcriptions of the spoken sentence), rather than only the single best one, to improve the performance of downstream SLU.
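To illustrate how several ASR hypotheses can help downstream SLU, here is a minimal sketch. It assumes the ASR returns a list of (transcript, confidence) pairs; the `classify_intent` keyword classifier and the example scores are hypothetical stand-ins, not part of any real product or API.

```python
from collections import defaultdict


def classify_intent(text: str) -> str:
    """Toy keyword classifier (hypothetical stand-in for a real SLU model)."""
    words = text.lower().split()
    if "yes" in words or "yeah" in words:
        return "confirm"
    if "no" in words or "nope" in words:
        return "deny"
    return "unknown"


def intent_from_nbest(nbest: list[tuple[str, float]]) -> str:
    """Sum ASR confidence per intent over all hypotheses and return the best."""
    if not nbest:
        return "unknown"
    scores: dict[str, float] = defaultdict(float)
    for transcript, confidence in nbest:
        scores[classify_intent(transcript)] += confidence
    return max(scores, key=scores.get)


# Made-up hypotheses for a caller who said "no" on a noisy line.
nbest = [("know", 0.40), ("no", 0.35), ("no thanks", 0.25)]
print(classify_intent(nbest[0][0]))  # top hypothesis alone: unknown
print(intent_from_nbest(nbest))      # all hypotheses together: deny
```

In this toy case the top transcript alone is misleading, while the lower-ranked hypotheses agree on the caller's intent.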

TTS: The Dialogue Manager comes into play again and fetches the right response for the customer based on the ongoing conversation. The Text-to-Speech (TTS) engine then takes that text from the Dialogue Manager and converts it into the audio that is played back to the caller.

Integration Proxy: Voice Agents talk with external systems such as CRM, Payment Gateways, Ticketing systems, etc., for personalization, validation, data fetching, etc. These are integration sockets that connect with external systems in order for voice agents to be effective and efficient in end-to-end automation.
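The flow described above (telephony audio, ASR, SLU, integration lookup, response, TTS) can be sketched as a single turn handler. This is a simplified illustration only: every function below is a hypothetical stub, the "audio" is plain text bytes, and the intent name and balance value are made up.

```python
from dataclasses import dataclass, field


@dataclass
class DialogueState:
    """State kept by the Dialogue Manager across turns."""
    turns: list[str] = field(default_factory=list)


def asr(audio: bytes) -> str:
    """Stub ASR: pretends the audio bytes are already UTF-8 text."""
    return audio.decode("utf-8")


def slu(transcript: str) -> dict:
    """Stub SLU: detects a single intent with a keyword."""
    intent = "check_balance" if "balance" in transcript.lower() else "unknown"
    return {"intent": intent}


def integration_proxy(intent: str) -> dict:
    """Stub for a CRM lookup made through the integration proxy."""
    return {"balance": "120.50"} if intent == "check_balance" else {}


def tts(text: str) -> bytes:
    """Stub TTS: returns the text as bytes instead of synthesized audio."""
    return text.encode("utf-8")


def handle_turn(audio: bytes, state: DialogueState) -> bytes:
    """Dialogue Manager: route one caller turn through the pipeline."""
    transcript = asr(audio)
    state.turns.append(transcript)
    meaning = slu(transcript)
    data = integration_proxy(meaning["intent"])
    if meaning["intent"] == "check_balance":
        reply = f"Your balance is {data['balance']} dollars."
    else:
        reply = "Sorry, could you repeat that?"
    return tts(reply)


state = DialogueState()
print(handle_turn(b"What is my balance?", state).decode("utf-8"))
```

In a real deployment each stub would be a separate engine or service, which is why the Dialogue Manager's orchestration role matters.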

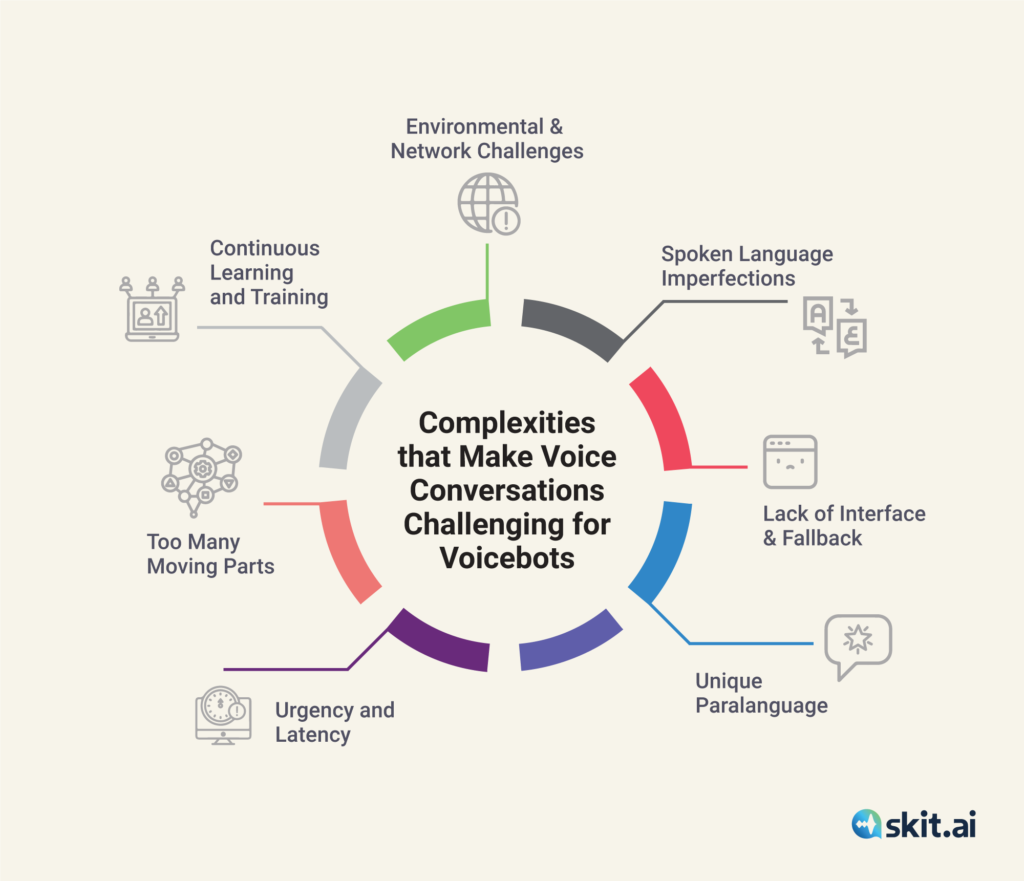

What Makes Voice Conversations Difficult for Voicebots

We now have an understanding of how a state-of-the-art voicebot works. To see why selecting the right vendor matters, we have to understand the nuances of voice: what makes it more challenging and complex than chat or any other conversational or contact center solution?

Environmental & Network Challenges:

Unlike a chatbot, a voicebot has to face interference from environmental activities and has to overcome them to deliver quality conversations.

- Background Noise: Background noise is inherent to voice conversations and comes in several forms:

  - Environmental noise

  - Multiple speakers in the background

  - Extraneous non-speech sounds from the speaker, such as coughing or breathing

In order for the SLU to identify intent and entities precisely, ASR should be able to differentiate the speaker’s voice from background noise and transcribe accurately. On the other hand, chatbots get clean textual data to work on and do not face this issue.

- Low-quality Audio Data from Telephony: Typically, a telephony transmission involves low-quality audio data, and there is a limit to how much one can pre-process the data.

Spoken Language Imperfections:

- User Correction: In real-life conversations we often speak first and then correct ourselves. For instance, asked “For how many people do we need to book the table?”, a caller may answer, “I need a table for 4… no, 5 people.” This can be very confusing for the voicebot. Even the answer “4-5 people” can be construed as 45, so the SLU needs to be good enough to decipher the real intent.

- Small Talk: During actual conversations, consumers often ask the voicebot to ‘hold on for a sec’, delaying their response because of something urgent. Such situations add to the complexity of conversations.

- Barge-in: Voicebots work perfectly when both parties wait for their turn to speak, and do not barge in while the other is speaking. But in the real world, customers speak while the voicebot is completing its message. This creates complexity and errors in communication.
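As an illustration of the user-correction case above (“4… no 5 people”), here is a minimal rule-based sketch. The list of correction cues and the `party_size` helper are assumptions made for this example; a production SLU would typically learn such patterns rather than hard-code them.

```python
import re
from typing import Optional

# Hypothetical cue words that signal the speaker is correcting themselves.
CORRECTION = re.compile(r"\b(?:no|sorry|actually|i mean)\b", re.IGNORECASE)
NUMBER = re.compile(r"\d+")


def party_size(utterance: str) -> Optional[int]:
    """Return the number the caller settled on, honoring self-corrections."""
    numbers = list(NUMBER.finditer(utterance))
    if not numbers:
        return None
    chosen = numbers[0]
    for later in numbers[1:]:
        # Text between the currently chosen number and the next one.
        between = utterance[chosen.end():later.start()]
        if CORRECTION.search(between):
            chosen = later  # a correction cue means the later number wins
    return int(chosen.group())


print(party_size("I need a table for 4... no 5 people"))  # 5
print(party_size("A table for 4 people at 7"))            # 4
```

Without a cue word between the two numbers, the first number is kept, so "4 people at 7" is not mistaken for a correction.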

Language Mixing and Switching: The speaker may decide to switch between languages or even mix them. This makes it difficult for the voicebot to comprehend the message and to choose the language for its reply. A chatbot, on the other hand, receives clean text data and does not deal with the vagaries of spoken communication, as people are more thoughtful when writing.

Lack of Interface & Fallback: In a chat window, when the chatbot does not understand an answer, it can typically present other options to the person. In a voice conversation there is no such visual interface to fall back on, which makes voice difficult to perfect.

Unique Paralanguage: The message encoded in speech can be truly understood by analyzing both linguistic and paralinguistic elements. More than the words, the unique combination of prosody, pitch, volume, and intonation of a person helps in decoding the real message.

Urgency and Latency:

Calling is usually either the last resort or the preferred modality for urgent matters, so expectations are sky-high. To preserve or strengthen brand equity, customer support must work like a charm; otherwise it leaves a lasting negative impression of the brand. In chat, a reply that arrives after 30 seconds does not hamper the conversational experience, whereas a voice conversation happens in real time. Skit.ai’s Digital Voice Agent responds within a second, and, unlike in chat, the conversation cannot simply pause while the customer takes half an hour to answer.

Too Many Moving Parts: A system is only as good as its weakest link. Dependence on third-party solutions makes management more challenging and limits the control a vendor has over voicebot performance. For instance, ASR, TTS, SLU, etc., which are advanced technologies in themselves, each require a dedicated team responsible for their proper functioning.

Continuous Learning and Training: Conversational AI is not a magic pill that you take once and are done. Over time, changes in your customers’ behavior will necessitate optimization of your product mix, so you need a dedicated team and bandwidth to keep improving it. Constant effort has two consequences: a focus on upgrades, and the learning curve advantages that come with time.

Types of Vendors in the Voice AI Space

Coming back to our original discussion of the different types of vendors in the space, there are mainly four types of vendors that provide AI-powered Digital Voice Agents. We’ve outlined them below with their respective limitations.

Telephony and CRM Vendors Trying to Enter the Voice Space

Telephony and CRM vendors usually have IVR as one of their offerings. Adding voice AI creates synergy in their sales operations and lets them cross-sell the solution to their existing customer base. To make this possible, they collaborate with small vendors or white-label a solution, and rely on off-the-shelf third-party tech (e.g. Google, Azure, Amazon, etc.) designed for simple, horizontal problems in single-turn conversations rather than complex ones.

Problems and challenges while engaging with such vendors:

- Low Ownership and Responsibility: Since voice is not their primary revenue-earning business, they are not seriously invested in it.

- High Reliance on Third-party Services: When a vendor relies heavily on third-party solutions, its control over the entire process is compromised unless it has its own tech stack working in sync. For example, Google’s ASR API has very low accuracy for short utterances such as yes, no, right, wrong, etc. If your use case requires handling such conversations, the vendor needs its own ASR to raise performance.

- Constant Effort and Training: Any AI application requires constant effort in terms of maintenance and upgrades. A company that is not AI-first or voice-first is unlikely to have the resources to sustain this in the long term, which is a major disadvantage.

Chat-first Companies Trying to Get into Voice AI

A chatbot does not require ASR and TTS blocks, since it receives its input as text and responds in text. It only needs the NLU block.

Chat-first companies try to reuse their existing chat platform’s NLU by pairing it with third-party ASR and TTS engines.

Chat-first Voicebot = ASR + TTS (third party) + NLU

Here a chat-first voicebot uses third-party ASR and TTS, which give its chatbot the ability to speak and to understand the spoken word. But since it is based on NLU, it will not be able to capture the essence and nuances of speech that we discussed earlier.

SLU vs. NLU: Without SLU, NLU might process the ASR transcriptions without accounting for the speech imperfections we discussed earlier. For example, in the case of debt collection, someone may say, “I can pay only six-to-seven hundred this month, not more.” We need to understand from the context that the user wants to pay anywhere between $600 and $700, and not $62,700. Such nuances can only be addressed by SLU, which is why it is indispensable.
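The “six-to-seven hundred” example can be made concrete with a small sketch. It assumes the transcript spells numbers as words and covers only the “X to Y hundred” pattern; the `parse_amount_range` helper is hypothetical and far narrower than what a real SLU would handle.

```python
import re
from typing import Optional

UNITS = {
    "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
    "six": 6, "seven": 7, "eight": 8, "nine": 9,
}

# Matches "six-to-seven hundred" as well as "six to seven hundred".
RANGE = re.compile(r"\b(\w+)[-\s]to[-\s](\w+)\s+hundred\b", re.IGNORECASE)


def parse_amount_range(utterance: str) -> Optional[tuple[int, int]]:
    """Return (low, high) in dollars for an 'X to Y hundred' phrase, else None."""
    match = RANGE.search(utterance)
    if match is None:
        return None
    low, high = (UNITS.get(word.lower()) for word in match.groups())
    if low is None or high is None:
        return None
    return low * 100, high * 100


print(parse_amount_range("I can pay only six-to-seven hundred this month, not more"))
# (600, 700)
```

Reading "to" as a range marker, rather than as the digit two, is what keeps the amount at $600 to $700 instead of $62,700.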

Oftentimes, transcripts from ASR are corrupted by noise, differences in accents, etc. NLU systems are trained on clean text and often cannot deal with the imperfections present in ASR transcripts. In a voice-first stack, ASR imperfections are taken into account while designing the SLU.

Challenges while engaging with such vendors:

- Expect more failures with chat-first voicebots, as they are at best a patchwork: a ragtag coalition of the easiest and cheapest technologies.

- Low ownership as the voice-tech solution is not their primary revenue-earning business.

- High reliance on external third-party services (as explained in the above section).

- Not Being Voice-first: An AI application needs constant effort to remain accurate and updated. A company that is not voice-first will struggle to keep up because it cannot dedicate a team to voice, and its solution will perpetually underperform.

How to spot such vendors: It is difficult for companies to decide which is a voice-first company and which is chat-first, so here are a few tips to separate the wheat from the chaff:

- Look at the Revenue Split: If the vendor claims to be a voice-first company, but has a majority of revenues coming from chat, text services, or other products then it is not a voice-first company.

- Proprietary Tech Stack: Look into the scope of their proprietary technologies; it gives a clear view of how serious they are about being voice-first. If they use third-party applications such as Google, Amazon, and Siri for everything, they are not serious voice vendors and are just experimenting to find additional revenue sources.

- Voice Team Size: Another valuable insight can come out of analyzing their voice team size. A chat-first company will not typically devote a significant part of its team to voice.

- Voice Road Map: A company of the ilk of Skit.ai will always have a tech roadmap covering the features it is going to release, the impact they will have, and how its R&D will innovate to stay future-proof.

Additionally, we are now starting to see another type of vendor: conversational analytics companies entering the Voice AI space.

Why Choose Voice-first Companies or Vertical AI companies?

One thing that is clear at this point is that voice conversations are more challenging than they seem; there is much more to them than meets the eye.

- High Ownership: The entire organization of a voice-first company is streamlined to deliver and own the outcomes of their voicebot. There are no distractions, only a razor-sharp area of focus. This makes their projects most likely to succeed and deliver transformative outcomes.

- Deep Domain Knowledge: A voicebot is a symphony, an orchestra of technologies working in tandem to deliver the intelligent, fluid, and human-like conversations that every consumer covets. Only voice-first companies that labor hard to make every part function smoothly and efficiently will deliver outcomes with maximum CX and RoI.

- Proprietary Tech Stack: Voice-first companies do use third-party components, but they leverage them to further performance and control. They tune the third-party tech and use it alongside their own proprietary tech to maximize the impact. For example, a company such as Skit.ai runs Google, Amazon, or Azure’s ASR in parallel with its own domain-specific ASR to get the highest accuracy and optimal performance. Because Skit.ai’s ASR is significantly better at short utterances, it kicks in wherever the conversational experts expect such utterances.

- Dedicated Team: Running an AI-first product comes with its own challenges. But for a company like Skit.ai, which has a dedicated team of 400-500 people laboring to solve just the voice conundrum, you can expect an outstanding product that is always further along on the learning curve and stands true to its promises.

- Long-term Engagement: Voice is the future of customer support. No other modality will come close, especially with the blazing advancements in Voice AI. So, a voice solution must not be implemented with a very narrow view of time and cost. Deeply committed Voice AI vendors will be the ones to seek as they will deliver superior results that not only help companies save costs but also aid them in carving out an exceptional voice strategy for brand differentiation.
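The idea in the Proprietary Tech Stack point, running a general-purpose ASR in parallel with a domain-specific one and preferring the latter where short utterances are expected, could be sketched roughly as follows. This is an illustrative assumption, not Skit.ai’s actual implementation: both ASR functions are stubs returning fixed strings, and the `expect_short_utterance` flag is a hypothetical signal from the dialogue design.

```python
from concurrent.futures import ThreadPoolExecutor


def general_asr(audio: bytes) -> str:
    """Stub for a third-party, general-purpose ASR (mishears a short word)."""
    return "yet"


def domain_asr(audio: bytes) -> str:
    """Stub for a domain-specific ASR tuned for short utterances."""
    return "yes"


def transcribe(audio: bytes, expect_short_utterance: bool) -> str:
    """Run both engines concurrently and pick one based on dialogue context."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        general = pool.submit(general_asr, audio)
        domain = pool.submit(domain_asr, audio)
        # Prefer the domain engine when the previous prompt was a
        # yes/no-style question; otherwise use the general engine.
        chosen = domain if expect_short_utterance else general
        return chosen.result()


print(transcribe(b"", expect_short_utterance=True))   # yes
print(transcribe(b"", expect_short_utterance=False))  # yet
```

Running both engines at once keeps the added latency close to that of the slower engine, which matters given the real-time constraints discussed earlier.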
